AI Slop and the future of information

John Oliver recently did a segment on AI slop, the low-quality, AI-generated garbage spreading across the internet. You can watch the terrifyingly funny show here.

As a marketer, I am well aware of AI’s capabilities in writing and design. In my opinion, it’s a useful tool for strategy and outlining, but it still has a long way to go before it can replace humans in producing solid, responsible work.

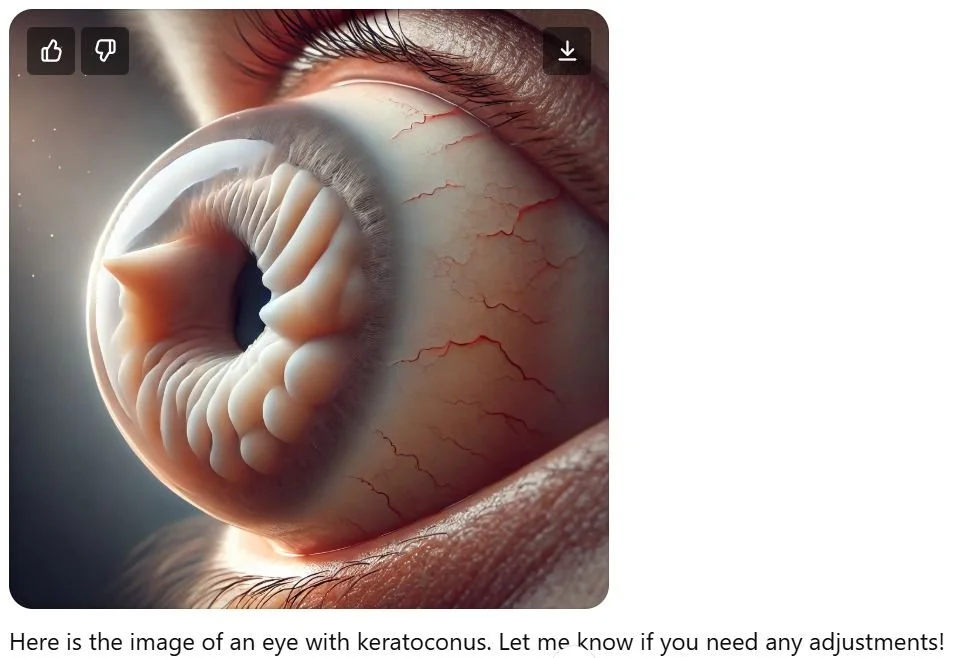

I tested DALL-E, the image generator built into ChatGPT, and posted about it on LinkedIn several months ago. I work in eye care, and keratoconus is a well-known ocular condition with plenty of text and images about it online, so I thought it would be a good condition to start testing AI with. I prompted DALL-E to create an image of an eye with keratoconus, and the image above is what it spat out.

What made me laugh most was the line DALL-E added at the bottom: “Let me know if you need any adjustments!”

Yes, how about a lobotomy so I can never see this image in my mind again?

While I know this image is not accurate, the problem is that others don’t. And most people don’t take the time to research or fact-check what they find online.

That’s the real terror of AI. It’s not the replacement of jobs, but the proliferation of inaccurate, sometimes terrifying, and blatantly false content. Separating truth from fiction was already challenging, since anyone can create a website or blog (yes, I know I have a blog here).

Below is an image of a patient with keratoconus, a condition where the cornea bulges out in a cone shape. Not a great condition to have, but not nearly as scary as what is shown above.

Knowing fact from fiction will be our superpower in the coming years.